Facial detection possibilities

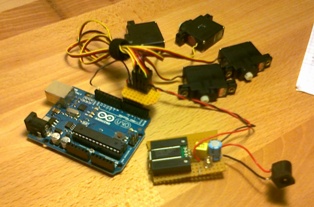

The first choice we had to make was whether to run the face detection on a computer or on a dedicated microcontroller that can do it independently. One usable device that we found is the CMUcam3. The pros and cons of both options are listed in the table below.

| CMUcam3 | Computer and webcam |
|---|---|
| + Everything is enclosed in the doll itself | - An external computer is needed |
| + The software is already installed | - We have to find usable face detection software |
| - Face detection is very slow; otherwise only colour tracking is available | + Face detection is fast enough (around 20 Hz) |
| - The communication between the microcontroller and the Arduino board can be a problem | + We need a protocol to establish communication, but we know that one exists |

Principle of motion of the neck and eyes

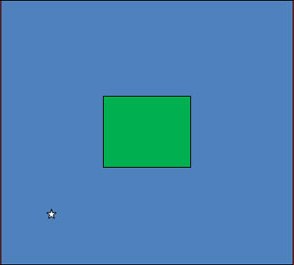

The image above represents the webcam's field of view. To make the robot move naturally, we divided it into two parts. The blue part is where the eyes need to move. If the eyes are already at their maximum position in the given direction, the neck rotates instead. Both actions aim to bring the coordinates of the face inside the green rectangle. Once the face is there, a counter is started so that the robot can wink at the person (in the Arduino code, after four seconds inside the rectangle and at most once every twenty seconds). The sizes of the areas are chosen according to the size of the image.

If, for example, a person is located at the coordinates of the white star (see image above), the robot will react so as to bring the face to the center of the green rectangle. It will have to lower the eyes and rotate them to the left.

The rotations always stay within the limits of our motors.
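
As an illustration of the principle above, here is a minimal sketch that classifies a face position relative to a central rectangle. The names `classifyFace`, `Reaction`, `Horizontal` and `Vertical` are hypothetical and do not appear in the project code. It assumes image coordinates with the origin at the top-left corner and y growing downward, and it treats the rectangle border as outside the rectangle.

```cpp
// Hypothetical helper types, for illustration only.
enum class Horizontal { Left, Centered, Right };
enum class Vertical { Up, Centered, Down };

struct Reaction {
    Horizontal horizontal;
    Vertical vertical;
    // True when the face lies strictly inside the central rectangle.
    bool faceCentered() const {
        return horizontal == Horizontal::Centered && vertical == Vertical::Centered;
    }
};

// Decide in which direction the eyes (or neck) would have to move so that
// the face ends up inside a rectangle centered in the image.
Reaction classifyFace(int faceX, int faceY, int imageWidth, int imageHeight,
                      int rectWidth, int rectHeight) {
    const int left = (imageWidth - rectWidth) / 2;
    const int right = (imageWidth + rectWidth) / 2;
    const int top = (imageHeight - rectHeight) / 2;
    const int bottom = (imageHeight + rectHeight) / 2;

    Reaction r{Horizontal::Centered, Vertical::Centered};
    if (faceX <= left) r.horizontal = Horizontal::Left;
    else if (faceX >= right) r.horizontal = Horizontal::Right;
    if (faceY <= top) r.vertical = Vertical::Up;         // small y = high in the image
    else if (faceY >= bottom) r.vertical = Vertical::Down;
    return r;
}
```

With a 640x480 image and a 40x40 rectangle, a face at (100, 400) is classified as left and down, which corresponds to the white-star example: the eyes have to be lowered and rotated to the left.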

OpenCV Solution

The purpose of this program is to:

- capture images from a webcam

- analyze them and detect frontal or profile faces

- get their coordinates in pixels

- send those coordinates over the serial port on which the microcontroller is connected

The face detection process relies on OpenCV, which is an open-source library that provides functions for real-time computer vision.

To recognize complex objects such as faces, OpenCV uses a statistical model, called a classifier, that is trained to find the sought object.

Training consists of giving the model positive samples, which are images containing the object, and negative samples, which do not contain it.

When the classifier is applied to an input image, a function scans the image at different locations and scales and checks whether each region corresponds to the samples on which the classifier has been trained.

In our case, as in many others, OpenCV uses a cascade classifier, which consists of several simpler classifiers (stages) applied one after another until the object candidate is rejected or all stages are passed.
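
The early-rejection idea behind a cascade can be sketched in a few lines. This is a toy model, not OpenCV's implementation: the `Stage` alias and the `passesCascade` function are hypothetical names, and the stages are arbitrary predicates rather than trained classifiers.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// One stage of the cascade: returns true if the candidate may still be the object.
template <typename Candidate>
using Stage = std::function<bool(const Candidate&)>;

// Apply the stages in order and stop at the first one that rejects the candidate.
// stagesEvaluated reports how many stages were actually run.
template <typename Candidate>
bool passesCascade(const std::vector<Stage<Candidate>>& stages,
                   const Candidate& candidate,
                   std::size_t& stagesEvaluated) {
    stagesEvaluated = 0;
    for (const auto& stage : stages) {
        ++stagesEvaluated;
        if (!stage(candidate)) {
            return false;  // rejected early: later stages are never evaluated
        }
    }
    return true;  // all stages passed
}
```

Because most image regions are rejected by the first cheap stages, the more expensive later stages only run on a small number of promising candidates.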

An extensive description of the working principle of classifier-based object detection is given on this website:

http://www.xavigimenez.net/blog/2010/02/face-detection-how-to-find-faces-with-opencv/

Description of the program

Here is a general description of the code. The listings further down are also generously commented for better understanding.

Libraries used

| Library | Purpose |
|---|---|
| iostream | General I/O in the console |
| sstream | C++ stringstream class, used to convert a number to a char array. NOTE: the C sprintf() function was tried first, but did not give good results. |
| OpenCV libraries | objdetect: functions for detecting objects with cascade classifiers; highgui: functions for webcam capture and image display; imgproc: functions for image processing (flip, color to gray, histogram equalization) |
| SerialClass | Found on the Arduino website, this is a generic, minimalistic and easy-to-use serial communication library. |

Global variables

A few variables are declared here because they have to be used in both main() and detectAndDisplay().

Main

Filenames of the two cascade classifiers used are loaded.

An attempt is made to connect to the selected serial port, using the library described above.

A frame is captured from the webcam, and its width and height are sent via the serial port, beginning with the character 'w'.

A loop is started, ending when pressing key 'q', in which the following actions are done:

- flip the frame (only useful to check on the laptop display)

- run the detectAndDisplay function on the frame, which will modify values of the int array tab[] in which are stored x and y coordinates values of the current face.

- convert the x and y int values into char arrays (i.e. 256 will become the three characters '2' '5' '6')

- send those characters over the serial connection

- send the resolution again regularly

Then the memory allocated for the webcam capture is released, and the window displaying the capture is destroyed.
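
The conversion of coordinates into fixed-width character sequences can be sketched as follows. This is an illustrative alternative to the listing below, not the code that was used: `encodeField` and `encodeCoordinates` are hypothetical names, the dot padding follows the message format described in the Arduino section, and clamping to the range 0 to 999 is an assumption made here so that every field is exactly three characters long.

```cpp
#include <iomanip>
#include <sstream>
#include <string>

// Encode one value as a tag followed by exactly three characters,
// padding missing leading digits with dots (e.g. 24 -> "x.24").
std::string encodeField(char tag, int value) {
    if (value < 0) value = 0;      // assumption: clamp to the 3-digit range
    if (value > 999) value = 999;
    std::ostringstream out;
    out << tag << std::setw(3) << std::setfill('.') << value;
    return out.str();
}

// Build the complete message for one face position.
std::string encodeCoordinates(int x, int y) {
    return encodeField('x', x) + encodeField('y', y);
}
```

For instance, `encodeCoordinates(24, 5)` yields `x.24y..5`, and a missing face at (0, 0) yields `x..0y..0`. The resulting string could then be passed to the serial library in a single write.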

Detect and Display

This function takes a frame and an int array as arguments. It first converts the input frame from color to gray levels, can equalize the histogram for better contrast (this call is commented out in the listings below), and then performs object detection with the detectMultiScale method of the cascade object, using the classifiers loaded above. Note that in the front face version the function is configured with the CV_HAAR_FIND_BIGGEST_OBJECT flag, which restricts the detection to a single object and makes the process a lot faster.

The dimensions and locations of the detected faces are put in a vector of rectangles, which is then used to draw the corresponding rectangles on the colored input frame.

The coordinates of the center of the rectangle are extracted and put into the int array tab[].

If no faces are detected, the coordinates are both set to zero.

Finally, the colored input frame with the rectangles drawn over the faces is displayed in a window.
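
The coordinate extraction described above can be sketched without OpenCV. Here `Box` is a hypothetical stand-in for `cv::Rect`, and `faceCenter` is an illustrative name. As in the listings, the last rectangle in the vector determines the result, and (0, 0) means that no face was found.

```cpp
#include <vector>

// Stand-in for cv::Rect: top-left corner plus size, in pixels.
struct Box { int x; int y; int width; int height; };

struct Center { int x; int y; };

// Return the center of the last detected rectangle, or (0, 0) if none was found.
Center faceCenter(const std::vector<Box>& faces) {
    if (faces.empty()) {
        return {0, 0};
    }
    const Box& face = faces.back();
    return {face.x + face.width / 2, face.y + face.height / 2};
}
```

One consequence of this convention is that a real face centered exactly at pixel (0, 0) cannot be distinguished from "no face", which is harmless in practice because a detected rectangle always has a non-zero size.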

Front face detection Code (used)

    #include "opencv2/objdetect/objdetect.hpp"
    #include "opencv2/highgui/highgui.hpp"
    #include "opencv2/imgproc/imgproc.hpp"
    #include "SerialClass.h"
    #include <iostream>
    #include <sstream>

    //serial port to use (check on which arduino is connected in Arduino IDE)
    #define PORT_USED "COM9"

    using namespace std;
    using namespace cv;

    /** Function Headers */
    void detectAndDisplay( Mat frame, int* tab);
    /*function that detects faces in the input frames, and puts its coordinates
      into the second argument, an int array*/

    /** Global variables */
    //make two cascade classifier with the files above
    CascadeClassifier frontal_cascade;
    //name of the window
    string window_name = "Capture - Face detection";
    /*following variables are here to store if a face has been detected in previous loop,
      so that other classifiers do not search for faces if one has already found one,
      for performance.*/
    bool frontalsize=0; //was a frontal face detected in previous loop?
    int height=0; //height of the webcam image in pixels
    int width=0; //width of the webcam image in pixels

    int main( int argc, const char** argv ) {
        /*Note, either copy these two files from opencv/data/haarcascades
          to your current folder, or change these locations*/
        String file1 = "haarcascade_frontalface_alt.xml";
        // can also use .xml lbpcascade_frontalface, haarcascade_frontalface_alt,
        // haarcascade_mcs_eyepair_big,...
        int send_resolution=0; // kind of timer, is increased at each loop, and resolution
                               // is sent when it reaches 20 (for example)
        CvCapture* capture; //video capturing structure used to capture frames from the webcam
        Mat frame; //frame from the webcam, actualised at each loop
        int tab[2]= {0,0}; //int array where are put the x-y coordinates of the current face

        //Let's create a new serial connection
        cout<<"Connecting to port "<<PORT_USED<<"..."<<endl;
        Serial mySerial = Serial(PORT_USED);
        //check_port_availability
        if(!mySerial.IsConnected()) {
            //this function returns 1 if the connection to the port succeeded,
            // 0 otherwise (check in SerialClass.h)
            cout <<"Port "<<PORT_USED<<" is not available!"<<endl;
        } else {
            cout <<"Port "<<PORT_USED<<" is connected."<<endl;
        }

        //load the cascade classifier
        if( !frontal_cascade.load( file1 ) ) {
            cout<<"--(!)Error loading "<<file1<<endl;
            return -1;
        };

        //read the video stream
        capture = cvCaptureFromCAM( 11 );
        //(-1) if there is only one camera available,
        //otherwise check documentation here:
        //http://www710.univ-lyon1.fr/~bouakaz/OpenCV-0.9.5/docs/ref/OpenCVRef_Highgui.htm#decl_cvCaptureFromCAM

        //retrieve height and width of the webcam
        height = cvGetCaptureProperty(capture,CV_CAP_PROP_FRAME_HEIGHT);
        width = cvGetCaptureProperty(capture,CV_CAP_PROP_FRAME_WIDTH);
        cout<<"width: "<<width<<endl;
        cout<<"height: "<<height<<endl;

        /*put values of height and width into strings
          stringstreams that will contain the numbers to send via serial port.
          e.g. {'1','2','3'} for the int value 123*/
        stringstream wstring;
        wstring<<width;
        stringstream hstring;
        hstring<<height;

        /*begin this sequence with "w" to tell the microcontroller that we're sending
          width and height
          if the resolution has 4 digits, the code needs to be adapted here and in the
          arduino board (4 instead of 3)
          but larger resolution image will take more time to process and coordinates
          will be sent slower*/
        mySerial.WriteData((char*)"w",1);
        mySerial.WriteData((char*)wstring.str().c_str(),3);
        //.str() to return a string, .c_str for the string to return
        // a char array, and then cast it into a char*
        mySerial.WriteData((char*)hstring.str().c_str(),3);

        if(capture) {
            char key=0; //key pressed. see below..
            while( (char)key != 'q' ) { //quit with 'q' key
                frame = cvQueryFrame(capture);
                flip( frame, frame, 1 ); //mirror the frame

                //Apply the classifier to the frame and get face
                //coordinates in "tab[0]" and "tab[1]"
                if( !frame.empty() ) {
                    detectAndDisplay(frame,tab);
                } else {
                    printf(" --(!) No captured frame -- Break!");
                    break;
                }

                //get coordinate values from "tab[0]" and "tab[1]"
                //and put them into char strings
                stringstream xstring;
                xstring<<tab[0];
                stringstream ystring;
                ystring<<tab[1];
                //if coordinates are zero, the commands above doesn't work,
                //so we put ourself a 0 in the strings
                if(tab[1]==0) ystring<<"000";
                if(tab[0]==0) xstring<<"000";

                cout<<"Sending ";
                cout<<"x:"<<tab[0];
                cout<<" y:"<<tab[1];
                cout<<" to serial port "<<PORT_USED<<endl;

                //sending coordinates to serial port as char array.
                //i.e. '1','2','3' for 123
                mySerial.WriteData((char*)"x",1);
                mySerial.WriteData((char*)xstring.str().c_str(),3);
                mySerial.WriteData((char*)"y",1);
                mySerial.WriteData((char*)ystring.str().c_str(),3);

                //Here I send again the resolution of the camera every 20 loops
                //so that the microcontroller program can restart in case of reset
                if(send_resolution==20) {
                    cout<<"width: "<<width<<endl;
                    cout<<"height: "<<height<<endl;
                    mySerial.WriteData((char*)"w",1);
                    mySerial.WriteData((char*)wstring.str().c_str(),3);
                    //.str() to return a string, .c_str for the string to return
                    // a char array, and then cast it into a char*
                    mySerial.WriteData((char*)hstring.str().c_str(),3);
                    send_resolution=0;
                }
                send_resolution++;

                //retrieve key pressed
                key = waitKey(10);
            }
        }

        //free memory
        cvReleaseCapture( &capture );
        cvDestroyWindow( window_name.c_str() );
        return 0;
    }

    void detectAndDisplay(Mat frame,int* tab) {
        //vector of rectangles that will contain the face
        vector<Rect> frontal;
        vector<Rect> left;
        vector<Rect> right;
        //initialize new empty frame
        Mat frame_gray;
        //remove colors from "frame" and put it into "frame_gray"
        cvtColor( frame, frame_gray, CV_BGR2GRAY );
        //equalize histogram of "frame_gray" and put it back into "frame_gray"
        //equalizeHist( frame_gray, frame_gray );

        //-- Detect frontal faces
        frontal_cascade.detectMultiScale(frame_gray, frontal, 1.3, 4, CV_HAAR_FIND_BIGGEST_OBJECT, Size(20, 20));
        frontalsize=frontal.size();
        //faces.size() returns the number of faces. When 0 faces,
        // it does not enter the following 'for' loop
        //5th argument can be several things:
        //CV_HAAR_DO_CANNY_PRUNING
        //CV_HAAR_FIND_BIGGEST_OBJECT|
        //CV_HAAR_DO_ROUGH_SEARCH
        // more info here http://ubaa.net/shared/processing/opencv/opencv_detect.html

        //if there are faces, we enter this for loop
        for(unsigned int i = 0; i < frontal.size() ; i++ ) {
            //two points are needed to draw a rectangle.
            //let's take them into the vector of faces
            Point Lowleft(frontal[i].x,frontal[i].y);
            Point UpRight(frontal[i].x + frontal[i].width,frontal[i].y + frontal[i].height);
            //make the rectangle and draw it into "frame"
            rectangle( frame, Lowleft, UpRight, Scalar(0,0,255), 2, 8, 0 );
            //save coordinates of the center of the rectangle
            tab[0]=frontal[i].x+(frontal[i].width>>1);
            tab[1]=frontal[i].y+(frontal[i].height>>1);
            cout<<"Frontal face! ";
        }

        //only if no faces:
        if(frontal.size()==0) {
            tab[0]=0;
            tab[1]=0;
            cout<<"No faces. ";
        }

        //display the colored frame with the added rectangle
        imshow( window_name, frame );
    }

Front and profile face detection Code

    #include "opencv2/objdetect/objdetect.hpp"
    #include "opencv2/highgui/highgui.hpp"
    #include "opencv2/imgproc/imgproc.hpp"
    #include "SerialClass.h"
    #include <iostream>
    #include <sstream>

    //serial port to use (check on which arduino is connected in Arduino IDE)
    #define PORT_USED "COM9"

    using namespace std;
    using namespace cv;

    /** Function Headers */
    void detectAndDisplay( Mat frame, int* tab); //function that detects faces
    //in the input frames, and puts its coordinates into the second argument, an int array

    /** Global variables */
    //make two cascade classifier with the files above
    CascadeClassifier frontal_cascade;
    CascadeClassifier profile_cascade;
    //name of the window
    string window_name = "Capture - Face detection";
    /*following variables are here to store if a face has been detected in previous loop,
      so that other classifiers do not search for faces if one has already found one,
      for performance.*/
    bool frontalsize=0; //was a frontal face detected in previous loop?
    bool leftsize=0; //was a left profile detected in previous loop?
    bool rightsize=0; //was a right profile detected in previous loop?
    int height=0; //height of the webcam image in pixels
    int width=0; //width of the webcam image in pixels

    int main( int argc, const char** argv ) {
        /* Note, either copy these two files from opencv/data/haarcascades
           to your current folder, or change these locations*/
        String file1 = "haarcascade_frontalface_alt.xml";
        // can also use .xml lbpcascade_frontalface, haarcascade_frontalface_alt,
        // haarcascade_mcs_eyepair_big,...
        String file2 = "haarcascade_profileface.xml";
        int send_resolution=0; // kind of timer, is increased at each loop, and resolution is sent
                               //when it reaches 20 (for example)
        CvCapture* capture; //video capturing structure used to capture frames from the webcam
        Mat frame; //frame from the webcam, actualised at each loop
        int tab[2]= {0,0}; //int array where are put the x-y coordinates of the current face

        //Let's create a new serial connection
        cout<<"Connecting to port "<<PORT_USED<<"..."<<endl;
        Serial mySerial = Serial(PORT_USED);
        //check_port_availability
        if(!mySerial.IsConnected()) {
            //this function returns 1 if the connection to the port succeeded,
            //0 otherwise (check in SerialClass.h)
            cout <<"Port "<<PORT_USED<<" is not available!"<<endl;
        } else {
            cout <<"Port "<<PORT_USED<<" is connected."<<endl;
        }

        //load the cascade classifier
        if( !frontal_cascade.load( file1 ) ) {
            cout<<"--(!)Error loading "<<file1<<endl;
            return -1;
        };
        if( !profile_cascade.load( file2 ) ) {
            cout<<"--(!)Error loading "<<file2<<endl;
            return -1;
        };

        //read the video stream
        capture = cvCaptureFromCAM( 11 );
        //(-1) if there is only one camera available, otherwise check documentation here:
        //http://www710.univ-lyon1.fr/~bouakaz/OpenCV-0.9.5/docs/ref/OpenCVRef_Highgui.htm#decl_cvCaptureFromCAM

        //retrieve height and width of the webcam
        height = cvGetCaptureProperty(capture,CV_CAP_PROP_FRAME_HEIGHT);
        width = cvGetCaptureProperty(capture,CV_CAP_PROP_FRAME_WIDTH);
        cout<<"width: "<<width<<endl;
        cout<<"height: "<<height<<endl;

        /*put values of height and width into strings
          stringstreams that will contain the numbers to send via serial port.
          e.g. {'1','2','3'} for the int value 123*/
        stringstream wstring;
        wstring<<width;
        stringstream hstring;
        hstring<<height;

        //begin this sequence with "w" to tell the microcontroller that we're sending width and height
        //if the resolution has 4 digits, the code needs to be adapted here and in the arduino board (4 instead of 3)
        //but larger resolution image will take more time to process and coordinates will be sent slower
        mySerial.WriteData((char*)"w",1);
        mySerial.WriteData((char*)wstring.str().c_str(),3);
        //.str() to return a string, .c_str for the string to return
        //a char array, and then cast it into a char*
        mySerial.WriteData((char*)hstring.str().c_str(),3);

        if(capture) {
            char key=0; //key pressed. see below..
            while( (char)key != 'q' ) { //quit with 'q' key
                frame = cvQueryFrame(capture);
                flip( frame, frame, 1 ); //mirror the frame

                //Apply the classifier to the frame and get face coordinates in "tab[0]" and "tab[1]"
                if( !frame.empty() ) {
                    detectAndDisplay(frame,tab);
                } else {
                    printf(" --(!) No captured frame -- Break!");
                    break;
                }

                //get coordinate values from "tab[0]" and "tab[1]" and put them into char strings
                stringstream xstring;
                xstring<<tab[0];
                stringstream ystring;
                ystring<<tab[1];
                //if coordinates are zero, the commands above doesn't work, so we put ourself a 0 in the strings
                if(tab[1]==0) ystring<<"..0";
                if(tab[0]==0) xstring<<"..0";

                cout<<"Sending ";
                cout<<"x:"<<tab[0];
                cout<<" y:"<<tab[1];
                cout<<" to serial port "<<PORT_USED<<endl;

                //sending coordinates to serial port as char array. i.e. '1','2','3' for 123
                mySerial.WriteData((char*)"x",1);
                mySerial.WriteData((char*)xstring.str().c_str(),3);
                mySerial.WriteData((char*)"y",1);
                mySerial.WriteData((char*)ystring.str().c_str(),3);

                //Here I send again the resolution of the camera every 20 loops
                //so that the microcontroller program can restart in case of reset
                if(send_resolution==20) {
                    cout<<"width: "<<width<<endl;
                    cout<<"height: "<<height<<endl;
                    mySerial.WriteData((char*)"w",1);
                    mySerial.WriteData((char*)wstring.str().c_str(),3);
                    //.str() to return a string, .c_str for the string to return
                    // a char array, and then cast it into a char*
                    mySerial.WriteData((char*)hstring.str().c_str(),3);
                    send_resolution=0;
                }
                send_resolution++;

                //retrieve key pressed
                key = waitKey(10);
            }
        }

        //free memory
        cvReleaseCapture( &capture );
        cvDestroyWindow( window_name.c_str() );
        return 0;
    }

    void detectAndDisplay(Mat frame,int* tab) {
        //vector of rectangles that will contain the face
        vector<Rect> frontal;
        vector<Rect> left;
        vector<Rect> right;
        //initialize new empty frame
        Mat frame_gray;
        //remove colors from "frame" and put it into "frame_gray"
        cvtColor( frame, frame_gray, CV_BGR2GRAY );
        //equalize histogram of "frame_gray" and put it back into "frame_gray"
        //equalizeHist( frame_gray, frame_gray );

        //-- Detect frontal faces
        if(rightsize==0 and leftsize==0) {
            //only if neither right nor left profile have been previously detected
            frontal_cascade.detectMultiScale( frame_gray, frontal, 1.2, 4,0, Size(20, 20));
            frontalsize=frontal.size();
        }
        //-- Detect left profiles
        if(frontalsize==0 and rightsize==0) {
            //only if neither frontal face nor right profile has been previously detected
            profile_cascade.detectMultiScale( frame_gray, left, 1.2, 3,0, Size(20, 20));
            leftsize=left.size();
        }
        //-- Detect right profiles
        if(frontalsize==0 and leftsize==0) {
            //first flip the frame to detect right profiles using the same classifier as for left profiles
            flip( frame_gray, frame_gray, 1 );
            profile_cascade.detectMultiScale( frame_gray, right, 1.2, 3,0, Size(20, 20));
            rightsize=right.size();
        }
        //faces.size() returns the number of faces. When 0 faces, it does not enter the following 'for' loop
        //5th argument can be several things:
        //CV_HAAR_DO_CANNY_PRUNING
        //CV_HAAR_FIND_BIGGEST_OBJECT|
        //CV_HAAR_DO_ROUGH_SEARCH
        // more info here http://ubaa.net/shared/processing/opencv/opencv_detect.html

        //if there are faces, we enter this for loop
        for(unsigned int i = 0; i < frontal.size() ; i++ ) {
            //two points are needed to draw a rectangle. let's take them into the vector of faces
            Point Lowleft(frontal[i].x,frontal[i].y);
            Point UpRight(frontal[i].x + frontal[i].width,frontal[i].y + frontal[i].height);
            //make the rectangle and draw it into "frame"
            rectangle( frame, Lowleft, UpRight, Scalar(0,0,255), 2, 8, 0 );
            //save coordinates of the center of the rectangle
            tab[0]=frontal[i].x+(frontal[i].width>>1);
            tab[1]=frontal[i].y+(frontal[i].height>>1);
            cout<<"Frontal face! ";
        }
        for(unsigned int i = 0; i < left.size() ; i++ ) {
            //two points are needed to draw a rectangle. let's take them into the vector of faces
            Point Lowleft(left[i].x,left[i].y);
            Point UpRight(left[i].x + left[i].width,left[i].y + left[i].height);
            //make the rectangle and draw it into "frame"
            rectangle( frame, Lowleft, UpRight, Scalar(0,0,255), 2, 8, 0 );
            //save coordinates of the center of the rectangle
            tab[0]=left[i].x+(left[i].width>>1);
            tab[1]=left[i].y+(left[i].height>>1);
            cout<<"Left profile! ";
        }
        for(unsigned int i = 0; i < right.size() ; i++ ) {
            /*two points are needed to draw a rectangle. let's take them into the vector of faces
              here, the x-position which is the low left corner of the rectangle is tweaked a bit
              because the detection occurred on a flipped frame*/
            Point Lowleft(width-right[i].x-right[i].width,right[i].y);
            Point UpRight(width-right[i].x-right[i].width + right[i].width,right[i].y + right[i].height);
            //make the rectangle and draw it into "frame"
            rectangle( frame, Lowleft, UpRight, Scalar(0,0,255), 2, 8, 0 );
            //save coordinates of the center of the rectangle
            tab[0]=width-right[i].x-right[i].width+(right[i].width>>1);
            tab[1]=right[i].y+(right[i].height>>1);
            cout<<"Right profile! ";
        }

        //only if no faces:
        if(frontal.size()==0 and right.size()==0 and left.size()==0) {
            tab[0]=0;
            tab[1]=0;
            cout<<"No faces. ";
        }

        //display the colored frame with the added rectangle
        imshow( window_name, frame );
    }

Serial communication

Once a face has been detected on the computer, its coordinates have to be sent to the Arduino board so that the robot can move accordingly.

The Arduino has built-in serial communication. The problem here was that we wrote the face detection in C++, so we had to find a C++ library for Windows that allows us to send information over a serial port. On the internet we found a serial class that enables communication with the Arduino board. The information that has been sent is then stored in a buffer until the Arduino is told to read a value.

The class we used can be found at the following link: http://www.arduino.cc/playground/Interfacing/CPPWindows

Arduino code

The program uploaded to the Arduino board is divided into two parts: first we read the incoming data that has been transmitted via the serial connection, and then we compute the movements that the servo motors have to perform.

The data is read in the function getValuesFromSerial(). The first thing the OpenCV program sends is the resolution of the webcam. The Arduino has a buffer where all incoming serial data is stored, one byte at a time, since the serial communication only transmits single bytes. When a byte is read with Serial.read(), it is removed from this buffer.

First, a check is done to see whether the buffer is empty. The subroutine that reads the resolution starts when the byte that has been read corresponds to 'w'. Then the width and height are read, one byte at a time, so a computation has to be performed to get the right values. The first byte read after 'w' is the 3rd (hundreds) digit of the width, so it is multiplied by 100. The following byte is the 2nd (tens) digit, so it is multiplied by 10 and added to the first number. The rest of the computation is done likewise. The '-48' in the computation converts an ASCII character to its numeric value, since the character '0' has ASCII code 48.
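
The digit arithmetic described above can be written as a small function. This is a sketch in plain C++ rather than the Arduino code itself, and `threeDigitsToInt` is a hypothetical name.

```cpp
// Convert three ASCII digit characters, most significant first, into an int.
// Subtracting 48 (the ASCII code of '0') turns a character into its value.
int threeDigitsToInt(char hundreds, char tens, char units) {
    return (hundreds - 48) * 100 + (tens - 48) * 10 + (units - 48);
}
```

For a webcam width of 640, the bytes '6', '4' and '0' arrive after the 'w', and the function computes 6*100 + 4*10 + 0 = 640.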

After that, the coordinates sent by the face detection software have to be read. A check is performed to see whether the last byte that has been read is either an 'x' or a 'y'. The information is sent in the following form:

'x' 'x digit 3' 'x digit 2' 'x digit 1' 'y' 'y digit 3' 'y digit 2' 'y digit 1' repeating

For example, if we want to send the coordinates x123 y456 x24 y5, it will look like this:

x123y456x.24y..5

A dot stands for a missing leading digit, i.e. a 0 value.

If there is no face recognized by the OpenCV software, it will send:

x..0y..0

If the value read is an 'x', we know that the previous values were the y-coordinate, and vice versa. If the value is a digit between 0 and 9, it is stored in an array called 'numbers'. If the value read is a dot, it is simply discarded and not saved in the array.

This loop continues until all digits of the x- or y-coordinate are known; then a number is built from the values in the numbers array. For a 3-digit number, the 3rd digit is multiplied by 100, the 2nd digit by 10, and both are added to the 1st digit. For a 2-digit number, the 2nd digit is multiplied by 10 and the 1st digit is added. For a one-digit coordinate, the only value in the numbers array is the 1st digit. This is done for both the x- and y-coordinates. Then everything is reset to 0 and the new incoming data is read.
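
The decoding of the message stream can be sketched as a small state machine in plain C++. It is a simplified variant, not the project's getValuesFromSerial(): it assumes that every 'x' or 'y' tag is followed by exactly three payload bytes and finishes a field after the third byte, whereas the original detects the end of a field when the next tag arrives. `CoordinateDecoder`, `Coordinates` and `decodeStream` are hypothetical names.

```cpp
#include <string>
#include <vector>

struct Coordinates { int x; int y; };

class CoordinateDecoder {
public:
    // Feed one received byte. Returns true when a complete x/y pair is available.
    bool feed(char byte) {
        if (byte == 'x' || byte == 'y') {   // a tag starts a new field
            field_ = byte;
            value_ = 0;
            remaining_ = 3;
            return false;
        }
        if (remaining_ == 0) return false;  // not inside a field: ignore the byte
        if (byte >= '0' && byte <= '9') {
            value_ = value_ * 10 + (byte - '0');
        }                                   // dots and other padding are discarded
        if (--remaining_ > 0) return false;
        if (field_ == 'x') { x_ = value_; return false; }
        y_ = value_;
        return true;                        // the y field completes a pair
    }
    Coordinates latest() const { return {x_, y_}; }

private:
    char field_ = 0;
    int value_ = 0;
    int remaining_ = 0;
    int x_ = 0;
    int y_ = 0;
};

// Decode a whole byte sequence into the list of coordinate pairs it contains.
std::vector<Coordinates> decodeStream(const std::string& bytes) {
    CoordinateDecoder decoder;
    std::vector<Coordinates> result;
    for (char byte : bytes) {
        if (decoder.feed(byte)) result.push_back(decoder.latest());
    }
    return result;
}
```

Feeding the example stream `x123y456x.24y..5` produces the pairs (123, 456) and (24, 5), and `x..0y..0` produces (0, 0), which the control code interprets as "no face".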

The loop() function that drives the servomotors contains one main block that lets the motors move only every 80 ms, so that the webcam image stays sharp enough to recognize faces. This block is divided into three parts (called "main loops" in the code comments).

The first part returns the robot to its neutral position. This is done when no face has been detected for 8 seconds. The condition is fulfilled when the computer sends zeros as face coordinates. We assume this happens only when no face is detected.

The second part checks whether the face's coordinates are inside the center rectangle. If the person has stayed inside the rectangle for two seconds, the eyes return to their neutral position and the neck turns to compensate for this rotation. At the same time, another check counts whether the person has stayed in front of the webcam for four seconds, in order to start winking. The time of the wink is then remembered so that the robot does not wink again for 20 seconds.

The third part contains the eyeball and neck movements. The entire code works as a closed feedback loop: the face position is compared with the rectangle to see whether it is inside or not. The motor moves to the left if the face's coordinate is to the left of the rectangle, and to the right in the opposite case. Once the coordinates are inside the rectangle, we do not move, in order to avoid oscillations. The same reasoning applies to the upward and downward motion of the eyeball. The left and right rotation of the neck occurs when the eyes are at either their maximal or minimal value. To smooth the motion of the neck, a small delay is added between each increment of the neck's servomotor. The neck is configured to move at constant speed in steps of 10 degrees. While the neck rotates, the eyes return to their neutral position. A possible improvement would be to give the neck's motion an increasing, then constant, then decreasing velocity profile by varying the length of the delay between increments.
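
One step of the horizontal feedback loop can be sketched as follows, in plain C++ without the Servo library. This is a simplified illustration and does not reproduce every branch of the Arduino code: `stepEyeX`, `AxisLimits` and `EyeStep` are hypothetical names, and the decision to hand over to the neck is reduced to "the eye has reached its limit on the side where the face is".

```cpp
struct AxisLimits { int min; int max; };

struct EyeStep {
    int angle;            // new eye servo angle in degrees
    bool neckShouldTurn;  // true when the eye is at its limit and the neck must take over
};

// Move the eye by at most one degree toward the face, staying within the servo limits.
EyeStep stepEyeX(int faceX, int imageWidth, int rectWidth,
                 int eyeAngle, AxisLimits limits) {
    const int left = (imageWidth - rectWidth) / 2;
    const int right = (imageWidth + rectWidth) / 2;

    if (faceX < left) {                        // face is left of the rectangle
        if (eyeAngle > limits.min) return {eyeAngle - 1, false};
        return {eyeAngle, true};               // eye at its minimum: use the neck
    }
    if (faceX > right) {                       // face is right of the rectangle
        if (eyeAngle < limits.max) return {eyeAngle + 1, false};
        return {eyeAngle, true};               // eye at its maximum: use the neck
    }
    return {eyeAngle, false};                  // inside the band: do not move
}
```

Calling this function once per 80 ms cycle gives the slow, one-degree-at-a-time tracking described above, and the dead band formed by the rectangle is what prevents oscillation around the target.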

The time between two blinks is randomized between 6 and 10 seconds. A random number between 6000 and 10000 milliseconds is generated, and when a timer reaches this number, it activates both motors that control the eyelids. Then the timer is reset.
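
The randomized blink timing can be sketched in standard C++ as shown here. `BlinkTimer` is a hypothetical class, and the current time is passed in as a parameter so that the logic can be tried without hardware; on the Arduino itself the time would come from millis() and the random interval from the built-in random() function.

```cpp
#include <random>

class BlinkTimer {
public:
    explicit BlinkTimer(unsigned seed)
        : rng_(seed), dist_(6000, 10000), last_(0), interval_(dist_(rng_)) {}

    // Returns true when it is time to blink, then draws a new random interval.
    bool shouldBlink(unsigned long nowMs) {
        if (nowMs - last_ < interval_) return false;
        last_ = nowMs;              // reset the timer
        interval_ = dist_(rng_);    // next blink in 6 to 10 seconds
        return true;
    }

    unsigned long interval() const { return interval_; }

private:
    std::mt19937 rng_;
    std::uniform_int_distribution<unsigned long> dist_;
    unsigned long last_;
    unsigned long interval_;
};
```

Calling `shouldBlink` once per pass of the main loop keeps the blinking independent of the face tracking, since it never blocks.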

Code

/* Mannequin robot 22 december 2012 Made by: Francois Bronchart Steven Depue Nymfa Noppe Arnaud Ronse De Craene Dieter van Isterdael Benjamin Vanhemelryck A webcam is inserted inside one of the mannequin's eyes. This eye can rotate in the x- and y- direction. Also the neck is allowed to rotate left or right. The webcam's view is sent to a computer to analyze the images and detect faces. The purpose of this program is to get the face coordinates from a computer, to read and use them. The different servo's will be moved in order to center the person's face in a center rectangle. Once the face is inside for a certain time the robot will wink to the person standing in front of the manneqin. */ #include <Servo.h> //add libraries for servo and Liquid cristal screen #include <LiquidCrystal.h> #define RECT_WIDTH 40 //rectangle size in which we want to position the face #define RECT_HEIGHT 40 #define NECKSTEP 10 // steps with which the neck will move //------reference values (neutral position) for the servos--------- #define YREF 100 #define XREF 80 #define LIDREF 80 #define NECKREF 65 //------min-max values for the servos-------- #define XMAX 130 #define XMIN 50 #define YMAX 115 #define YMIN 75 #define NECKMAX 170 #define NECKMIN 10 //LiquidCrystal lcd(8,9,10,11,12,13); //compact arrangement //----define the servos---- Servo servox; Servo servoy; Servo servolid; Servo neck; //------variables for the serial communication------ byte incomingByte; //byte (character) incoming int xfinished=0; //0 when no x value finished, 1 for 0-9, 2 for 10-99, 3 for 100-999 int yfinished=0; //0 when no y value finished, 1 for 0-9, 2 for 10-99, 3 for 100-999 byte numbers[3]= { '0','0','0'}; //stores the 3 last incoming bytes int i=2; //position in the array : numbers[i] //also counts the 1,2 or 3 that will go in the two xfinished and yfinished int facex=0; //x coordinate of the face int facey=0; //y coordinate of the face int w=0; //width of the viewed screen by the webcam int h=0; // height 
of the viewed screen by the webcam //-----initial values----- int tempx=80; int tempy=90; int neckx=90; int tempneck=0; //------timer variables---------- //long timertemp=0; long starttime=0; //timers for to avoid too quick rotations. Leave time to sharpen the //view of the webcam long tdelay=0; long timerfacetemp=0; //timers for reset of all the servo's if no face is detected long timerface=0; long timer=0; //timer for the winking. Reset when we move of when no face is detected long timer_wink=0; //save time when the face is inside the rectangle long timer_centering; //time a person is inside the rectangle to reset the eyes long didwink=0; //timer if we just winked long tempblink=0; //timers necessary to save time to blink every random time long timer_blink=0; void getValuesFromSerial(); void setup() { Serial.begin(9600); servox.attach(7,600,2400); // attaches the servo on pin 7 to the servo object servoy.attach(6,600,2400); servolid.attach(2,600,2400); neck.attach(4); servox.write(XREF); //Start from the reference values for all the servos servoy.write(YREF); neck.write(NECKREF); starttime=millis(); //timer for motor movements } void loop() { servolid.write(80); //makes sure the eyelid is still lifted up getValuesFromSerial(); //get the coordinates of the face from the computer tdelay=millis(); //timer necessary for motor movement /*lcd.setCursor(0,0); //printing on the lcd the face coordinates, the neck coordinate and //tempy. 
lcd.print("facex:"); lcd.print(facex,DEC); lcd.print(" "); lcd.setCursor(0,1); lcd.print("facey:"); lcd.print(facey,DEC); lcd.print(" "); if (neckx>=100){ lcd.setCursor(11,0); lcd.print(neckx,DEC); lcd.setCursor(17,0); lcd.print("00"); } if (neckx<100){ lcd.setCursor(17,0); lcd.print(neckx,DEC); lcd.setCursor(11,0); lcd.print("000"); } lcd.setCursor(13,0); lcd.print(tempy,DEC);*/ if ((tdelay-starttime)>80) { //delay necessary between to motor movements //for a clear webcam image to recognize faces if(w!=0 && h!=0) { //motor movement allowed only if communication is established //with the computer if(facex==0 && facey==0){ //First main loop. No faces are found timerface=millis(); if ((timerface-timerfacetemp)>8000) { //allowed to go to neutral position if no //face is found during 8 seconds tempx=XREF; tempy=YREF; neckx=NECKREF; servox.write(tempx); servoy.write(tempy); neck.write(neckx); delay(2); timerfacetemp=timerface; //save time for next time we go to neutral position } timer=0; //set timer to zero for winking } else if (facex>(w-RECT_WIDTH)/2 && facex<(w+RECT_WIDTH)/2 && facey>(h-RECT_HEIGHT)/2 && facey<(h+RECT_HEIGHT)/2){ //Second main loop if the coordinates of the face are inside the central rectangle if (timer!=0) { //has already been in the rectangle timer_wink=millis(); timer_centering=millis(); if ((timer_centering-timer)>2000){ // When not moving since 2s, the neck centers on //the person instead of the eyes. In such way the eyes return in their neutral position. 
            while(tempx>XREF){
              neckx+=1; //turn the neck on the tracked angle neckx so later neck moves start from the real position
              neck.write(neckx);
              tempx-=1;
              servox.write(tempx);
              delay(30);
            }
            while(tempx<XREF){
              neckx-=1;
              neck.write(neckx);
              tempx+=1;
              servox.write(tempx);
              delay(30);
            }
            timer=0; //set timer to zero for winking
          }
          if ((timer_wink-timer)>4000 && (timer_wink-didwink)>20000) {
            /*wink only after 4 sec in the rectangle and at most every 20 sec (not every 4 sec if the person stays in front of the webcam).*/
            servolid.write(70); //action motors for winking
            delay(5);
            servolid.write(150);
            delay(100);
            timer=0; //reset from winking
            didwink=timer_wink; //save the time to avoid winking every 4 sec
          }
        }
        else { //Save the time as it is the first time we enter the central rectangle
          timer=millis();
        }
      }
      else { //Third main branch. When faces are detected and they are not in the defined rectangle
        //the eyes and the face have to move.
        timerfacetemp=millis();
        if (facex<(w-RECT_WIDTH)/2 && tempx>XMIN){
          //rotation to the left in the x-direction if not on its minimal value
          tempx-=1;
          servox.write(tempx);
        }
        else if (facex>(w+RECT_WIDTH)/2 && tempx<XMAX) {
          //rotation to the right in the x-direction if not on its maximal value
          tempx+=1;
          servox.write(tempx);
        }
        else if (neckx>NECKMIN && tempx==XMIN) {
          //if the eyes have moved to their minimal value and the neck is not at its minimal
          //value then we rotate the neck
          tempneck=neckx-NECKSTEP; //step with which the neck will move
          while (neckx!=tempneck) { //neck rotation as long as the final value is not obtained.
            neckx-=1;
            neck.write(neckx);
            if (tempx!=XREF) //as the neck turns the eyes are slowly recentered to their reference values
            {
              tempx+=1;
              servox.write(tempx);
            }
            if (tempy>YREF) {
              tempy-=1;
              servoy.write(tempy);
            }
            if (tempy<YREF){
              tempy+=1;
              servoy.write(tempy); //write the new value, as in the branch above
            }
            delay(30); //necessary to avoid brusque movements
          }
        }
        else if (neckx<NECKMAX && tempx==XMAX) {
          //if the eyes have rotated to their maximal value and the neck is not at its maximal
          //value then we rotate the neck
          tempneck=neckx+NECKSTEP; //step with which the neck will move
          while (neckx!=tempneck) { //neck rotation as long as the final value is not obtained.
            neckx+=1;
            neck.write(neckx);
            if (tempx!=XREF) //as the neck turns the eyes are slowly recentered to their reference values
            {
              tempx-=1;
              servox.write(tempx);
            }
            if (tempy>YREF) {
              tempy-=1;
              servoy.write(tempy);
            }
            if (tempy<YREF){
              tempy+=1;
              servoy.write(tempy); //write the new value, as in the branch above
            }
            delay(30);
          }
        }
        if (facey<(h-RECT_HEIGHT)/2 && tempy<YMAX) {
          //upward rotation in the y-direction if not on its maximal value
          tempy+=1;
          servoy.write(tempy);
        }
        else if (facey>(h+RECT_HEIGHT)/2 && tempy>YMIN) {
          //downward rotation in the y-direction if not on its minimal value
          tempy-=1;
          servoy.write(tempy);
        }
        timer=0; //reset timer because the face wasn't in the rectangle
        timerfacetemp=millis(); //update timerfacetemp to avoid recentering immediately if no face is detected
      }
    }
    /*if(timer_blink==0) { //random for blinking.
      //Takes a time between 6 and 10 seconds
      timer_blink=random(6000,10000); //random
    }
    else{
      tempblink=millis();
      if (tempblink>=timer_blink) { //sees if the time we waited before blinking is long enough
        servolid.write(70); //action motors for blinking
        delay(5);
        servolid.write(150);
        delay(100);
        timer_blink=0;
      }
    }*/
    starttime=tdelay; //update starttime to avoid motor rotation in order to have a clear image
  }
}
void getValuesFromSerial() {
  if (Serial.available() > 0) {
    // read the incoming byte:
    incomingByte = Serial.read();
    if(incomingByte=='w') {
      while (Serial.available()<=0) { };
      int c=100*(Serial.read()-48);
      while (Serial.available()<=0) { };
      int d=10*(Serial.read()-48);
      while (Serial.available()<=0) { };
      int u=(Serial.read()-48);
      w=c+d+u;
      while (Serial.available()<=0) { };
      c=100*(Serial.read()-48);
      while (Serial.available()<=0) { };
      d=10*(Serial.read()-48);
      while (Serial.available()<=0) { };
      u=(Serial.read()-48);
      h=c+d+u;
    }
    if(incomingByte=='x') yfinished=i; //if I read x, then the previous value was a y of length "i"
    else if(incomingByte=='y') xfinished=i; //if I read y, then the previous value was an x of length "i"
    else if(incomingByte>='0' && incomingByte<='9') {
      /*if incoming byte is neither an x nor a y, and if it is a number from 0 to 9 then we store it in the array numbers[] and we increase its index.
      We could do "-48" directly here but an array of three INT takes twice as much memory as an array of three CHAR*/
      numbers[i]=incomingByte;
      i++;
    }
    if(xfinished>0 && yfinished==0) {
      //when a new y appears, the x is finished, so set the cursor to 2,1 in order to display the x
      //according to the number of characters (base 10) of the number received,
      //compute the value from the 1, 2 or 3 characters received
      //and display it on the lcd (optional)
      if(xfinished==3) {
        facex=100*(numbers[0]-48)+10*(numbers[1]-48)+(numbers[2]-48);
      }
      else if(xfinished==2) {
        facex=10*(numbers[0]-48)+(numbers[1]-48);
      }
      else {
        facex=(numbers[0]-48);
      }
      // x coordinate has been computed, stored and displayed,
      // we can now reset all characters in the array numbers[] to zero
      numbers[2]='0';
      numbers[1]='0';
      numbers[0]='0';
      //reset position in the array numbers[]
      i=0;
      //new x value has yet to be received
      xfinished=0;
    }
    if(yfinished>0 && xfinished==0) {
      //when a new x appears, the y is finished, so set the cursor to 2,2 in order to display the y
      if(yfinished==3) {
        facey=100*(numbers[0]-48)+10*(numbers[1]-48)+(numbers[2]-48);
      }
      else if(yfinished==2) {
        facey=10*(numbers[0]-48)+(numbers[1]-48);
      }
      else {
        facey=(numbers[0]-48);
      }
      numbers[2]='0';
      numbers[1]='0';
      numbers[0]='0';
      i=0;
      yfinished=0;
    }
  }
} |
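
The framing that `getValuesFromSerial()` expects can be read off the code above: a `w` marker followed by three width digits and three height digits, then alternating `x` and `y` markers, each followed by one to three decimal digits. A value is complete only when the next marker arrives. As a minimal sketch of the same idea without blocking reads, the parser below is written in plain C++ so it can be tried on a desktop. `FaceParser`, `feed`, `commit` and `selfCheck` are hypothetical names that do not appear in the original sketch, and the cap of three digits per value is an assumption taken from the three-character `numbers[]` handling.

```cpp
#include <cassert>
#include <string>

// Hypothetical byte-at-a-time parser for the framing described above:
//   'w' + 3 digits (width) + 3 digits (height)
//   then "x<1-3 digits>y<1-3 digits>x..." for the face coordinates.
struct FaceParser {
    int w = 0, h = 0;          // image size in pixels
    int facex = 0, facey = 0;  // last complete face coordinates

    // Consume one received byte; never waits for further input.
    void feed(char c) {
        const bool isDigit = (c >= '0' && c <= '9');
        if (c == 'w') {                 // start of a size header
            headerLeft = 6;
            headerAcc = 0;
            field = 0;
            value = 0;
            digits = 0;
            return;
        }
        if (headerLeft > 0) {
            if (isDigit) {
                headerAcc = headerAcc * 10 + (c - '0');
                if (--headerLeft == 0) {        // all six digits received
                    w = static_cast<int>(headerAcc / 1000);
                    h = static_cast<int>(headerAcc % 1000);
                }
                return;
            }
            headerLeft = 0;             // malformed header: drop it, handle c below
        }
        if (c == 'x' || c == 'y') {     // a new marker ends the previous value
            commit();
            field = c;
            return;
        }
        if (isDigit && field != 0 && digits < 3) {  // assumed limit: 3 digits
            value = value * 10 + (c - '0');
            ++digits;
        }
    }

private:
    long headerAcc = 0;  // up to 999999, so wider than a 16-bit int
    int headerLeft = 0;  // header digits still expected
    char field = 0;      // 'x' or 'y' while that value's digits arrive
    int value = 0;       // value accumulated so far
    int digits = 0;      // digits accumulated so far

    // Store the accumulated value into the coordinate it belongs to.
    void commit() {
        if (digits > 0) {
            if (field == 'x') facex = value;
            else if (field == 'y') facey = value;
        }
        value = 0;
        digits = 0;
    }
};

// Usage sketch: feed a stream shaped like the one the sketch above reads.
inline void selfCheck() {
    FaceParser p;
    for (char c : std::string("w320240x160y120x")) p.feed(c);
    assert(p.w == 320 && p.h == 240);
    assert(p.facex == 160 && p.facey == 120);
}
```

On the Arduino, `feed()` would be called once for each byte returned by `Serial.read()`. Because it keeps its state between calls instead of waiting in `while (Serial.available()<=0)`, a truncated header cannot stall `loop()`, and a fourth digit is ignored rather than written past the end of a buffer.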